Category: Longform


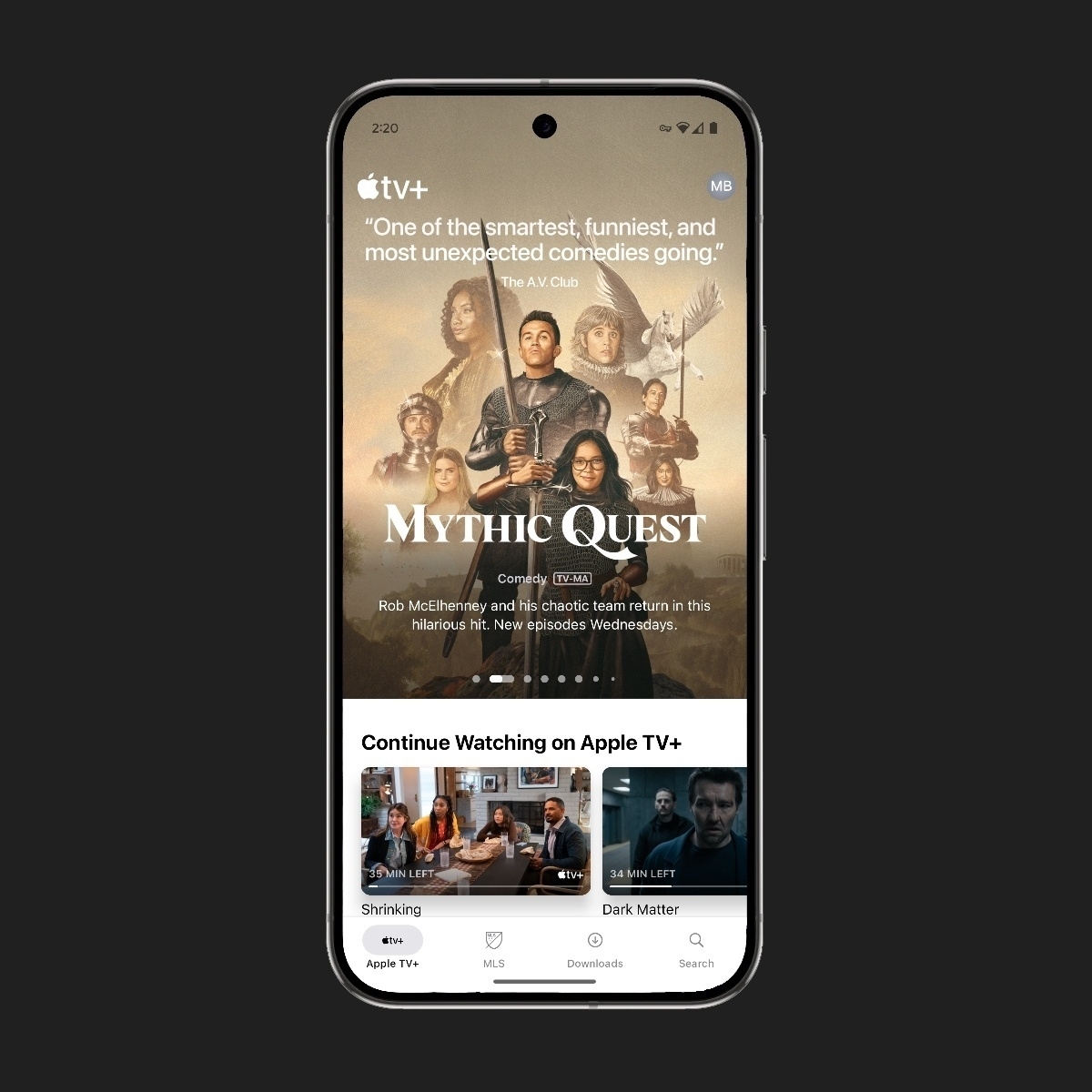

Apple TV app on Android is just an Apple TV+ app

Right off the bat, the app requires an Apple ID to log in, consistent with Apple’s approach to most of its other apps, including Apple Music on Android. Apple will likely cite “privacy” as the reason 🙄

A significant drawback is that movies and shows you’ve previously purchased from Apple TV don’t appear in the app. This feels like a missed opportunity, perhaps left out intentionally as an incentive for users to stay within Apple’s ecosystem, even with Apple TV+ now available on Android.

The “About this app” section on the app’s Play Store page says the following:

Watch exclusive shows and movies on Apple TV+ and stream live sports. With the Apple TV app, you can:

Watch exclusive, award-winning Apple Originals shows and movies on the streaming service Apple TV+. Enjoy thrilling dramas like Presumed Innocent and Bad Sisters, epic sci-fi like Silo and Severance, heartwarming comedies like Ted Lasso and Shrinking, and can’t-miss blockbusters like Wolfs and The Gorge. New releases every week, always ad-free.

Also included with your Apple TV+ subscription is Friday Night Baseball, featuring two live MLB games every week throughout the regular season.

Stream live soccer matches on MLS Season Pass, giving you access to the entire MLS regular season, including every time Lionel Messi takes the pitch, and each playoff and Leagues Cup clash, all with no blackouts.

Access the Apple TV app everywhere: it’s on your favorite Apple and Android devices, streaming platforms, smart TVs, gaming consoles, and more.

The Apple TV app makes watching TV easier:

Continue Watching helps you pick up where you left off seamlessly across all your devices.

Add movies and shows to Watchlist to keep track of everything you want to watch later.

Stream it all over Wi-Fi or with a cellular connection, or download to watch offline. The availability of Apple TV features, Apple TV channels…

Despite not being able to access your library of movies and TV shows, the app is generally well designed, even if it uses Apple’s iOS design language rather than Google’s Material Design. I’m not surprised by that.

On Android, the interface feels intuitive. Overall, I guess it’s a fine app. One glaring issue is that it doesn’t support casting. Just about every movie and TV streaming app supports Cast. So that’s stupid.

Google Unifies Android Development for XR, Enabling Camera Access

Google is doing the right thing by bringing all of Android’s development features from phones to Android XR. One big question developers had was whether they could use the pass-through cameras on Android XR headsets. It appears they can, based on a recent email exchange between VR developer Skarred Ghost and a Google spokesperson, who confirmed that Android XR developers will have access to camera functionality.

The spokesperson clarified that developers can use existing camera frames with user permission, just like any other Android app. Specifically, developers can request the world-facing (rear) camera stream using camera_id=0 and the selfie-camera (front) stream using camera_id=1 through the standard Android camera APIs (Camera2 and CameraX). Access to the world-facing camera requires the standard camera permission, just like on phones. For the selfie camera, developers receive an image stream of the user’s avatar, generated by avatar provider apps or services from user-tracking data captured by the inward-facing cameras. The spokesperson emphasized that Android developers can use the same camera management classes (like CameraX) on Android XR headsets as they do on phones, enabling them to grab frames and video, save media, and run machine learning analysis.

This shows that Google and the Android team have not only learned from their experience with Android handsets, TVs, and wearables but have also built an ecosystem of developer tools that lets developers create one app for all platforms instead of a separate app for each. This is a significant advantage for developers working across many form factors, especially when they use Jetpack libraries for a cohesive UI across Android hardware.

This move by Google signals a bright future for Android XR development. The unification of the platform, coupled with camera access, empowers developers and paves the way for a new generation of immersive experiences.

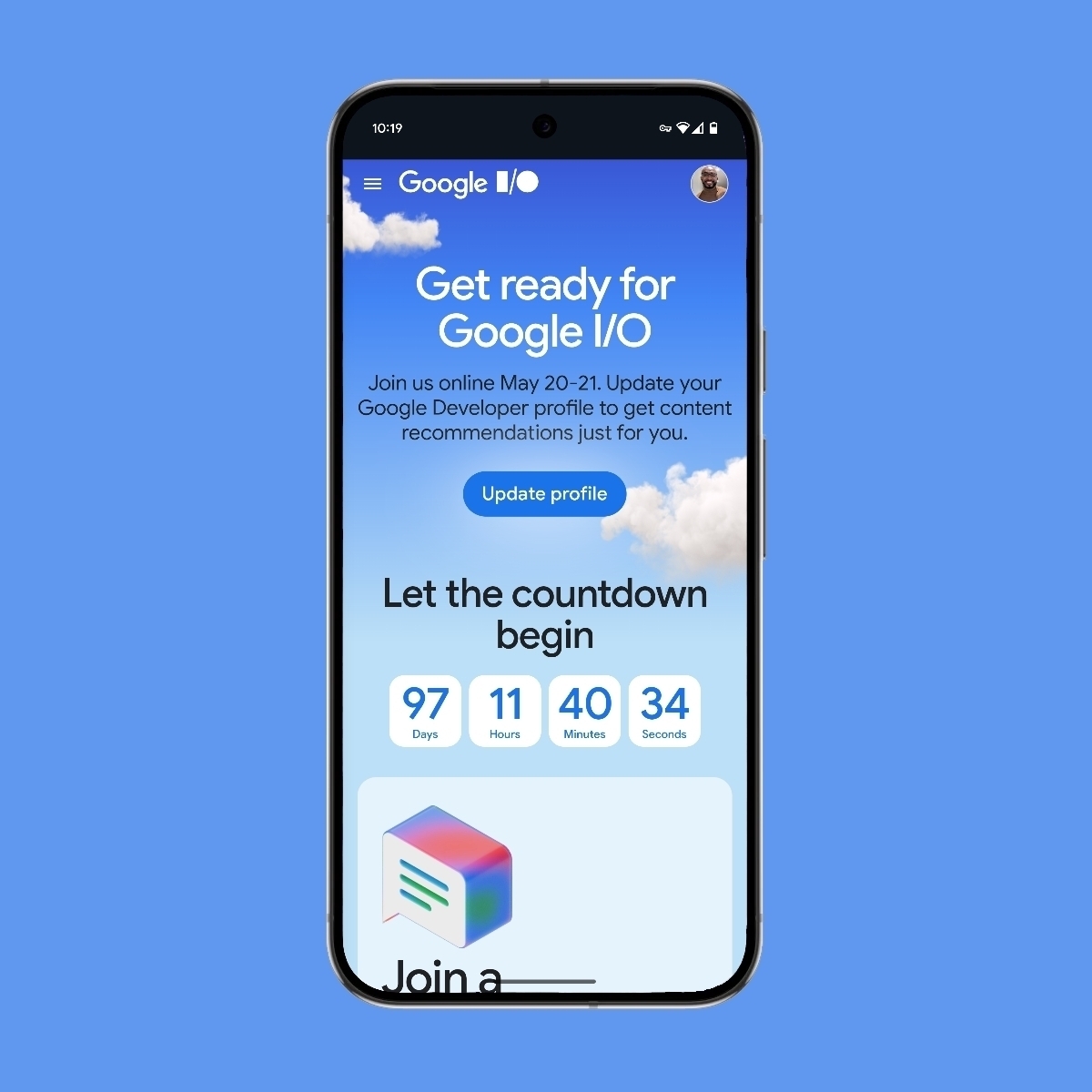

Let The Countdown Begin: Google I/O 2025

Google I/O, the annual developer conference, is set to take place at Shoreline Amphitheatre on May 20-21! Registration is currently open, and tickets are being distributed in phases. I’ve already secured my badge for what will be my fifth I/O!

While the event schedule typically isn’t released until a couple of weeks prior, I expect this year’s I/O to be heavily focused on AI, offering developers new tools to improve their native and web applications. Last year, Gemini was unveiled piece by piece, showcasing its capabilities across Android, ChromeOS, and the Web.

Android XR will likely have a more prominent role this year, especially after the recent unveiling of its software and hardware at Samsung’s Galaxy Unpacked event. I’m particularly excited about Project Astra and the UI/UX for the Android XR glasses, which were teased earlier this year.

The Pixel will undoubtedly take center stage. The Pixel 9a should be released by then, and we might even get a sneak peek at the Pixel 10 series. There have also been rumors about Android making its way to laptops, given that the ChromeOS and Android teams are now under the same Platforms & Devices division.

As you can see, there’s a lot to look forward to at Google I/O. AI will continue to be a central theme, and I’m eager to see what innovations are unveiled.

AI's Promise: Insights from the AI Summit in Paris

We’re at a fascinating moment with Artificial Intelligence. It feels like everyone’s talking about it, some with excitement, others with a bit of trepidation. It reminds me of something Pharrell Williams said at the recent AI Summit in Paris:

“The internet in the ’90s…people were genuinely worried.”

He’s right. By doing a little research and seeing how people viewed the internet back then, I’ve gotten a good understanding of just how worried many were. Some marveled at the instant access to information, while others worried about its impact. It’s funny, isn’t it? Fast forward to today, and it’s hard to imagine life without it. We’re at a similar inflection point with AI, and honestly, I think that’s what makes events like the AI Summit in Paris so important. They bring together people from all over the world—researchers, tech companies, everyday consumers—and spark a global conversation about what AI can achieve. This isn’t about robots taking over; it’s about AI becoming a powerful partner, accelerating human ingenuity and ushering in a new era of innovation. And this vision was echoed by Sundar Pichai, who emphasized the incredible potential of AI to address some of humanity’s greatest challenges.

I think one of the biggest hurdles AI faces is perception. We’ve all seen the movies, from the villainous AI in I, Robot to the dystopia of The Matrix, and it’s easy to fall into the trap of thinking AI is inherently malicious, destined to take our jobs and turn us into batteries. But like most things in life, there’s a good side to this. Like any tool, AI can be used for good or bad, and at the AI Summit, we saw so many examples of the good it can do.

AI isn’t a sudden invention; it’s the next logical step in our technological evolution. Just as we moved from basic computing to the internet, and then to mobile, AI is now poised to become ubiquitous. Imagine a “universal AI assistant” – seamlessly integrated into your Android phone, your Google Workspace, your smart home, and beyond. This is the vision behind concepts like “Project Astra”: AI that understands context, anticipates needs, and assists you across all aspects of your life. It’s not just a feature; it’s a fundamental shift in how we interact with technology.

AI’s real magic? It’s all about collaboration. And science is where we’re seeing some truly mind-blowing breakthroughs. Consider AlphaFold, an AI system from Google DeepMind that can predict the shapes of proteins with incredible accuracy. Proteins are the building blocks of life, and knowing their shape is key to understanding diseases and developing new treatments. Researchers estimate that AlphaFold has saved years of research time, not just days or weeks. Over a million researchers worldwide have used AlphaFold, accessing over 200 million protein structure predictions and accelerating research in areas from drug discovery to combating antibiotic resistance. Building on this success, Isomorphic Labs is using machine learning to revolutionize drug design, potentially shortening development timelines and creating more effective treatments.

And we’re just scratching the surface. The next big thing on the horizon? Quantum computing. Think of a regular computer’s bits as light switches: each one is either on or off. A quantum computer’s qubits can exist in a superposition of on and off at the same time, which gives quantum computers the potential to solve certain problems that are out of reach for today’s computers. Breakthroughs like Google’s Willow quantum chip, with its improved coherence and control, promise to unlock computational power previously unimaginable. Collaborative efforts, like those with Servier in France, are pushing the boundaries of what’s possible, exploring how quantum AI can solve complex problems in materials science, chemistry, and beyond.

AI isn’t just changing science; it’s changing the world around us, one innovation at a time. Waymo has already logged millions of passenger trips, proving that self-driving cars aren’t just a futuristic dream but a present-day reality. This isn’t just about convenience; it’s about increasing road safety and accessibility. With 110 new languages added, Google Translate is tearing down communication barriers and building bridges of understanding across cultures. Imagine the impact on education, business, and personal connections.

AI is also revolutionizing healthcare, from assisting in cancer research to improving diabetic retinopathy screening (which helps doctors detect eye disease caused by diabetes). Partnerships like the one with Institut Curie, along with work in India and Thailand, are leading to earlier diagnoses, more personalized treatments, and, ultimately, better patient outcomes. AI-powered flood forecasts now cover 80 countries, and wildfire mapping provides crucial real-time information, helping communities prepare for and respond to natural disasters. These tools are saving lives and protecting livelihoods.

I was particularly struck by the stories shared at the AI Summit, like the one about Max using Gemini to learn about his son’s rare disease. It really brings home how AI can empower individuals and make a profound difference in people’s lives. It’s stories like these that make the potential of AI so incredibly exciting.

“We are at the dawn of a golden age of innovation.” – Sundar Pichai

Let’s be real: AI is powerful, and with great power comes great responsibility. Worries about bias, job displacement, and ethical implications are legitimate and must be addressed head-on. But just as we developed safeguards for other transformative technologies, we can – and must – do the same for AI. Responsible AI development is paramount. This means prioritizing fairness, transparency, and accountability, and embedding ethical considerations into the very design of AI systems. Human oversight is crucial; AI should be a tool that augments human capabilities rather than replacing them.

We also need to create an environment where innovation can thrive. This requires significant investment in infrastructure, like the billions Google is spending on data centers and AI development. It also means investing in people. Programs like “Grow with Google” and the Global AI Opportunity Fund, which have trained millions of people in digital skills, are essential to ensuring that everyone can benefit from the AI revolution. And we need to be clear about AI’s limitations: models can sometimes be inaccurate, they are vulnerable to misuse, and the computational power they require can have significant energy implications. We need to be honest about these challenges, take them seriously, and work to solve them. AI should be democratized, meaning its access and benefits should be widely shared.

Governments have a strategic role to play in shaping the future of AI. We need aligned policy across countries, striking a delicate balance between mitigating risks and fostering innovation. Overly restrictive regulations could stifle progress, while a laissez-faire approach could lead to unintended consequences. The goal should be to create an environment where AI can flourish responsibly, benefiting all of humanity.

“AI is a profound technology, and it’s important that we develop it responsibly.” – Sundar Pichai

At the AI Summit, Sundar Pichai spoke eloquently about the “once-in-a-generation opportunity” that AI presents. We are at the dawn of a “golden age of innovation,” and the potential benefits are immense. But we can’t afford to be complacent. Other nations are investing heavily in AI, and we risk falling behind if we don’t act decisively. Just as people in the ’90s feared that search engines would replace libraries (they didn’t; they just made information more accessible), I believe AI will augment, not replace, human capabilities.

Imagine a future where AI empowers us to not just solve, but vanquish the world’s most pressing challenges – from climate change to disease, once and for all. Imagine a future where personalized education, accessible healthcare, and sustainable energy are available to all. This isn’t just sci-fi anymore; it’s a future within our reach, if we embrace the collaborative potential of human ingenuity and artificial intelligence.

#YouTube Is The New Television

YouTube CEO Neal Mohan’s recent blog post, “Our Big Bets for 2025,” isn’t just a corporate update; it’s a super interesting peek into where online video and entertainment are headed. It’s pretty clear that YouTube isn’t just along for the ride; they’re trying to steer the whole darn thing. Let’s break down what these “big bets” tell us about YouTube’s plans and how they might affect, well, everyone.

Mohan’s claim that “YouTube is the epicenter of culture” is spot on, right? From viral dances to breaking news, YouTube’s fingerprints are all over what we watch, share, and talk about. It’s become a massive cultural force, and I’m excited to see how they double down on this in the future. Imagine even cooler tools for spotting and boosting new trends, giving creators an even bigger stage to connect with their fans.

The “YouTubers are the new Hollywood startups” idea is a perfect example of how YouTube has empowered creators. It’s not just a hobby anymore; making YouTube videos is a real career, with creators building serious businesses and influencing millions of people. YouTube recognizing this and supporting its creators is key. More tools, resources, and ways to make money will only help this growth, creating a dynamic community of talent and innovation.

The “YouTube is the new television” narrative has been around for a while, but Mohan’s emphasis shows just how much things have changed. With smart TVs everywhere, YouTube’s a major player in our living rooms, going head-to-head with traditional TV. This changes everything, from ads and content to how we even define “television.” And speaking of the living room, let’s not forget YouTube Primetime! Even though their own original shows might have missed the mark, offering live sports like NBA and WNBA games, plus other premium content, makes YouTube a one-stop shop for entertainment. Smart move.

The focus on AI is probably the most interesting “big bet.” AI’s already a big part of YouTube’s algorithms and how things work, but the future possibilities are mind-blowing. Think AI-powered video editing, automatic captions and translations making videos more accessible, and even AI-generated content (with some safety nets, hopefully). The potential is huge, and it could totally change how we make and watch videos.

Beyond the headlines, the blog post has some cool stats. YouTube’s dominance in podcasts, for example, is surprising but also makes sense. More podcast integration could be a game-changer for creators and listeners. And with more people watching YouTube on their TVs, YouTube’s focus on connected TVs and better streaming is a smart move.

Looking ahead, YouTube’s 2025 vision is ambitious and optimistic. They’re not just keeping up with change; they’re trying to lead the way. By betting on creators, tech, and their role as a cultural hub, YouTube’s setting itself up for a big future in media and entertainment. It’s going to be fun to watch what happens next. And hey, even the comments section is a whole lot better than it used to be, right? (Okay, maybe not always.)

Why I Enjoy My #Pixel (Even Though Everyone Has an iPhone)

I was recently reminded that people with Android phones are often seen as stubborn nonconformists when someone asked me why I don’t have an iPhone. It happened during an in-person conversation, when I used my Pixel to reply to a text. The person simply asked why I didn’t have an iPhone, and I replied that I prefer the phone I have. The idea seemed foreign to them. The exchange stayed with me as I watched the Lakers game and noticed the sea of iPhones in the stands. It made me think about how the “blue bubble” of iMessage has become such a status symbol, even though I’ve found my Android integrates perfectly with all the services I use daily.

I remember when I first switched to Android because of its customization options. I love being able to personalize my home screen with widgets and icon packs that reflect my style. It’s not about being different; it’s about having a phone that truly feels like mine. And the variety! There are so many different Android phones available, each with its own strengths. I chose my Pixel because of its camera and its smooth performance, but someone else might prefer a phone with a longer battery life or a larger screen. The point is, there’s an Android phone out there for everyone.

But in the past five years, my preference for Pixel has solidified even further because of its seamless integration with cutting-edge AI. Platforms like Gemini and Perplexity, which are revolutionizing how we interact with technology, work so much better within the Android ecosystem, especially on Pixel devices. It’s not just about the customization anymore; it’s about having access to these powerful tools that enhance productivity and provide a truly intelligent mobile experience. This level of AI integration is a game-changer, and it’s something I wouldn’t want to sacrifice.

Interestingly enough, I almost switched to an iPhone myself last year. When Apple unveiled Apple Intelligence at WWDC, I was intrigued, and I was almost ready to jump ship when the iPhone 16 came out. However, after the initial excitement subsided, I realized that Apple Intelligence wasn’t quite the finished product it was presented as. Apple was, and frankly still is, well behind the competition in AI. Almost a year later, Apple has made some progress, but Apple Intelligence still lags behind platforms like Gemini on Google Pixel and other Android devices. That solidified my decision to stay with Android.

It’s funny how we’ve come to equate iPhones with “normalcy.” Apple’s marketing has been incredibly effective, creating this image of the iPhone as the must-have device. But I think it’s important to challenge that idea. Choosing Android isn’t about being stubborn; it’s about making an informed decision based on my needs and preferences. It’s about appreciating the open-source nature of the Android ecosystem, which gives me more control over my device and access to a wider range of apps and these powerful AI platforms. And honestly, I’ve never felt like I’m missing out on anything. In fact, I often find myself appreciating the unique features and capabilities of my Android phone, especially its superior AI integration.

What about you? What made you choose your current phone? I’d love to hear your thoughts on the “iPhone norm” and why you prefer your device.

Time Magazine Does Kendrick Lamar's Performance A Service

I’ll spare you another blog post dissecting Kendrick Lamar’s Super Bowl halftime performance. Instead, I’ll highlight Time Magazine’s, since I think it’s the most comprehensive breakdown out there. In its conclusion, the writer says the following:

In its framing, narrative approach, and density, Kendrick Lamar’s Super Bowl was unlike any that have come before it. To some, it mystified; to others, it kicked open the door for what this format could be. Just because something is expected of you, he seemed to be saying, doesn’t mean that’s the path you should take. Maybe Kendrick, in his own words, does deserve it all.

If you haven’t watched it, I suggest you do.

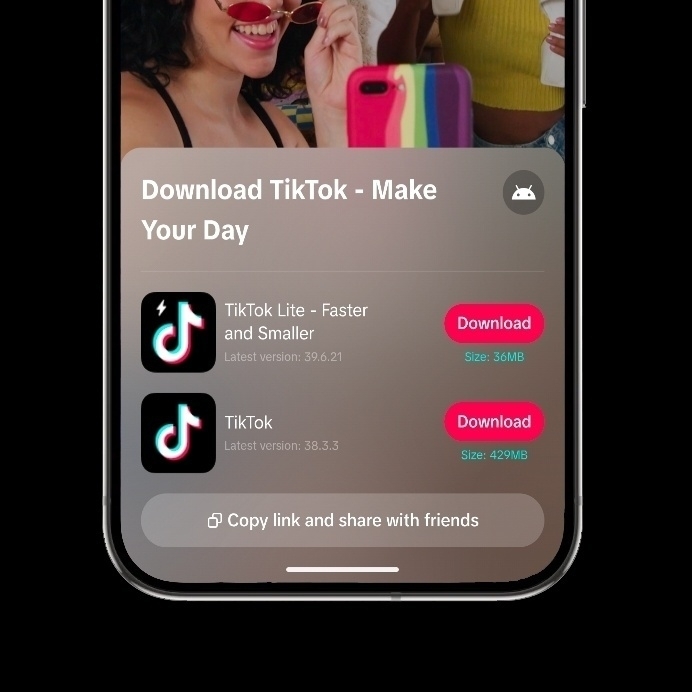

TikTok Is Still Available on Android

On X, formerly known as Twitter, TikTok’s policy account posted that U.S. Android users can still get TikTok by downloading it from the company’s website at tiktok.com/download.

We're enhancing ways for our community to continue using TikTok by making Android Package Kits available at https://t.co/JoNVqKpnrS so that our U.S. Android users can download our app and create, discover, and connect on TikTok.

— TikTok Policy (@TikTokPolicy) February 8, 2025

More information at our Help Center: …

Android users are familiar with installing APK files to get apps that aren’t in the Google Play Store, though doing so can be risky, since some people create malicious APK files to compromise Android devices. The good news is that Google Play Protect scans apps installed on Android devices, including apps that weren’t installed from the Play Store, and warns you if anything looks potentially harmful.

I won’t be installing TikTok, since I gave it up a few years ago, but for those still on the TikTok clock, including creators, this is great news.

Google's "Dream Job" Super Bowl Ad Score

Like many people, I love three things about the Super Bowl: the game, the halftime performance, and the ads. This year, my excitement peaked with the halftime performance, followed by the game, and then the ads. If you follow me on Mastodon or Bluesky, you’ll have seen that about 90% of my posts focused on Kendrick Lamar’s halftime performance, with 5% dedicated to the ads and the remaining 5% to the game. Congratulations, Eagles!

I was particularly interested in seeing how others received Google’s “Dream Job” commercial, which I absolutely loved. I’d rate it a “B+,” and I’ll explain why after sharing what others had to say. Here’s the commercial:

Yahoo Sports also gave the ad a “B” grade. Here’s what they had to say about it:

Google using straight-up emotional terrorism to sell phones now, showing a young girl embracing her father as both a child and as a college student. Wicked, but effective.

I get it, but the point of an ad is to be effective, and this one really is. NPR didn’t give out a grade, but it did a much better job of explaining what Google accomplished with this ad.

Typically, an AI, which sounds like a real person coaching you through a hypothetical job interview, might give off serious vibes like HAL 9000 (the killer computer in 2001: A Space Odyssey). But Google’s ad features a father talking about his work experience to the Gemini Live AI chatbot — “I show up every day, no matter what” — while imagery reveals he’s talking about raising his daughter. It adds up to an emotive, touching spot that emphasizes how people can use technology to perform better, rather than depicting a giant corporation offering software that encourages you to depend on them more as every year passes — seeing AI less as a job killer and more like a job search enabler. Hmmm.

The New York Times had this to say about Google’s ad:

The national ad for Google’s Gemini personal assistant is likely to be the most slickly handsome production in the field. If the use of Capra-esque family moments to humanize an A.I.-generated voice that coaches a dad for a job interview completely creeps you out, however, feel free to move this to the bottom of the list.

Oddly enough, the NYT’s writer wasn’t too fond of Google’s approach to humanizing A.I., yet named OpenAI’s ChatGPT ad the best ad of the night. To some degree, I understand: OpenAI is the leading name in all things AI. ChatGPT is the Google Search of AI.

Over on Threads, quite a few people had emotional reactions to Google’s ad. I think this is what Google was going for.


Chris Carley, despite being a Google Pixel customer, is consistently critical of Google, so his use of the phrase ‘did me dirty’ isn’t surprising. I admire his dedication to critiquing Google products, a balance I try to maintain in my own feedback. See his post below.

View on Threads

The commercial was generally well-received, and I was impressed. It successfully captured a human and relatable tone, demonstrating Gemini’s practical applications on the Pixel. The subtle approach to Pixel promotion aligns with Android’s focus on user choice (within its own ecosystem). While the ad masterfully evoked human emotion, the sales message felt slightly less developed, which kept it from an ‘A’ grade. Strategically, Google may have prioritized associating human emotion with the brand over direct sales. Perhaps a relatable celebrity could have further amplified this emotional connection. Regardless, I found it highly enjoyable.

Now go rewatch Kendrick Lamar’s halftime performance.

Super Bowl Sunday and the Tribes We Belong To

Okay, so today’s Super Bowl Sunday. Big deal, right? Just another excuse to wear a jersey and eat questionable snacks. But seriously, even my church got in on the action. I swear, the place looked like a Cowboys fan club meeting – and as a Commanders fan, I’m contractually obligated to be bitter. But all those jerseys got me thinking…

It’s curious, isn’t it? It’s not just football teams that inspire this kind of devotion. America has its own set of “teams” – not all of them athletic – that people rally behind. Think Cowboys, Yankees, Lakers, sure, but also Apple, and even Taylor Swift. It’s fascinating how we choose these “teams” and how fiercely we defend them.

And let’s be real, this tribalism isn’t just about the big names. It’s in the small things too. Think about the great Android vs. iPhone debate. Green bubbles vs. blue bubbles. It’s a digital divide that separates friends and families (okay, maybe that’s a bit dramatic). But seriously, it’s another example of how we find our tribe and stick with them, even when it comes to something as silly as phone preferences.

Anytime you step away from the beloved teams, you’re practically an outcast (just kidding… mostly). But seriously, it does feel like we’re wired to belong, to find our tribe. Whether it’s a sports team, a brand, or even a pop star, we find something to connect with and declare our allegiance. We’re tribal creatures, after all.

This whole jersey-filled Sunday got me wondering, why do we do this? What is it about being part of something bigger than ourselves that’s so appealing? Psychologists have explored this, suggesting it taps into our need for community and identity. Being a fan connects us to something larger, giving us a sense of belonging and shared purpose. It’s like having an extended family of fellow fans. But it goes beyond just feeling good. Our “team” affiliations can influence how we see the world, from what we buy to how we interact with others. Think about it: do you find yourself more drawn to people who share your fandoms? Do you subtly (or not so subtly) judge those who root for the “wrong” team? It’s funny how these seemingly small allegiances can shape our perceptions and even our relationships.

And where do we draw the line between healthy fandom and, well, not-so-healthy tribalism? So, from Cowboys jerseys to green bubbles, it seems we’re always finding new ways to define our tribes. The question is, why? Maybe it’s something to think about as we head into the rest of the week.

Anyway, I’ve got the Chiefs winning, even though I want the Eagles to win.