Category: Android

My app is coming along! It’s actually really fun crowdsourcing tips and ideas. I really love the Android Dev community! As suggested, I’ll be working on the pages next. Polish will come way later when it’s ready for publishing on the Play Store. 😁

This makes me even more excited about Google I/O!

Chrome at a Crossroads: Antitrust Fallout and the Future of Google's Browser

The tech world is watching closely as the U.S. Department of Justice (DOJ) and Google battle over the future of the internet giant’s search dominance. Following a landmark ruling that found Google illegally maintained a monopoly in online search, the focus has shifted to potential remedies, and one proposal has sent ripples through the industry: forcing Google to sell its ubiquitous Chrome browser.

The Antitrust Verdict and Proposed Fixes

In August 2024, U.S. District Judge Amit Mehta delivered a significant blow to Google, ruling that the company had indeed acted unlawfully to protect its monopoly in the general search market. A key finding centered on Google’s multibillion-dollar agreements with companies like Apple, Mozilla, and various Android device manufacturers to ensure Google Search was the default option, effectively boxing out competitors (CBS News, TechPolicy.Press).

Now, in the ongoing remedy phase of the trial (as of April 2025), the DOJ is arguing for significant structural changes to restore competition. Its most drastic proposal? Requiring Google to divest Chrome, the world’s most popular web browser with billions of users (Mashable). The DOJ contends that Chrome serves as a critical “entry point” for searches (accounting for roughly 35% of Google searches, according to some reports) and that selling it is necessary to level the playing field (CBS News, MLQ.ai). Other proposed remedies include banning Google from paying for default search engine placement and requiring the company to share certain user data with rivals to foster competition.

Potential Suitors Emerge

The possibility of Chrome hitting the market, however remote, has prompted several companies to express interest during court testimony. Executives from legacy search player Yahoo, and newer AI-focused companies OpenAI and Perplexity, have all indicated they would consider purchasing Chrome if Google were forced to sell (Courthouse News Service, India Today).

Yahoo Search’s General Manager, Brian Provost, testified that acquiring Chrome would significantly boost Yahoo’s market share and accelerate its own browser development plans (Tech Times). OpenAI’s Head of Product for ChatGPT, Nick Turley, suggested acquiring Chrome could help “onramp” users toward AI assistants (Courthouse News Service). Perplexity’s Chief Business Officer, Dmitry Shevelenko, stated his belief that Perplexity could operate Chrome effectively without diminishing quality or charging users, despite initially being reluctant to testify for fear of retribution from Google (The Verge via Reddit).

An Independent Future for Chrome?

While the prospect of established companies like Yahoo or disruptive forces like OpenAI taking over Chrome is intriguing, it raises concerns about simply swapping one dominant player’s control for another. Google, naturally, opposes the divestiture, arguing it would harm users, innovation, and potentially jeopardize the open-source Chromium project that underpins Chrome and many other browsers (Google Blog).

There’s a strong argument to be made that the ideal outcome wouldn’t involve another tech giant acquiring Chrome. Instead, perhaps a company like Perplexity, which is challenging the traditional search paradigm, could be a suitable steward. Even better, Chrome could transition to an independent entity, perhaps governed similarly to the non-profit Mozilla Foundation (which oversees Firefox) or maintained purely as an open-source project like Chromium itself. That feels like the most pro-competitive and pro-user path. It would prevent the browser, a critical piece of internet infrastructure and an especially vital one in the Android ecosystem I’m passionate about, from becoming a tool solely to funnel users into a specific ecosystem, whether it’s Google’s, Microsoft’s, OpenAI’s, or Yahoo’s. An independent Chrome could focus purely on being the best possible browser, fostering true competition and innovation across the web.

The remedy hearings are expected to conclude in the coming weeks, with Judge Mehta likely issuing a decision by late summer 2025. However, Google is expected to appeal any adverse ruling, meaning the final fate of Chrome and the resolution of this antitrust saga could still be years away (PBS NewsHour). Until then, the tech industry, developers, and billions of users worldwide will be watching with anticipation.

The Android Desktop Future is Closer (and More Exciting) Than You Think

Alright, let’s talk about the future of the desktop. For years, we’ve seen mobile and desktop operating systems dance around each other, sometimes borrowing features, sometimes existing in separate silos. But the lines are blurring, and based on recent insights, like the piece over at 9to5Google discussing a next-gen Android desktop experience, the future looks incredibly compelling, especially for Android fans like myself.

The article hits on something crucial: for Android to truly succeed on the desktop, it can’t just be another Windows or macOS clone. It needs to leverage its strengths, particularly in AI with Gemini, to create something fundamentally new – potentially a voice-first, agent-driven experience. Imagine interacting with your desktop naturally, having it understand context whether you’re dictating text or commanding it to find files or browse the web, maybe even without needing a constant hotword. That’s not just iterative; that’s transformative. The potential inclusion of a true, desktop-class Chrome browser is also massive news, addressing a long-standing gap for power users on larger Android screens.

Now, some might be skeptical. Can Android really handle a demanding desktop workflow? I’d argue we’re already seeing strong indicators that it can. Look at ChromeOS. For my day-to-day as a web developer, it’s become surprisingly robust. I regularly run Linux, manage complex projects on the Acquia Cloud Platform, and even dive into my latest Android app development using Android Studio – all on my HP Dragonfly Pro Chromebook. It handles this multi-faceted workflow remarkably well.

Seeing how capable ChromeOS already is makes me incredibly optimistic about a dedicated, next-generation Android desktop OS built from the ground up for this purpose. If ChromeOS, which shares DNA with Android, can manage my development tasks, imagine a purpose-built Android system infused with powerful AI, seamless voice interaction, and a full-fledged browser. It represents the convergence I’ve been waiting for – the power and flexibility needed for professional work combined with the vibrant app ecosystem and forward-thinking AI integration that Android excels at. The potential for a unified, intelligent computing experience across all devices is palpable, and frankly, I can’t wait to see it unfold.

Here we go!

The Pixel 9a is here, and I’m putting my main SIM in it. I’m packing up my Pixel 9 Pro and putting it on the shelf. I’m going to use the 9a for a few months to see how I like using a low-to-mid-range Android device.

Comment with anything you want me to focus on or “test”.

Pixel's Paradox: AI Excellence, Innovation Apathy

I’ll admit, even I raised an eyebrow at the results of this Pixel poll. I mean, 4,700 people? That’s a decent sample size, I suppose. Still, the reasons they cited… well, let’s just say they weren’t exactly groundbreaking. Especially when you consider the iPhone juggernaut – you know, the one that’s seemingly glued to the hands of 50-60% of the US population, according to Counterpoint Research as of Q4 2024 (and growing, I’m sure). But I digress. Android Authority asked a simple question: ‘Why do you use a Pixel phone?’ And the answers… were revealing, to say the least.

Back in my day – and yes, I’m going to start this that way – Google’s Pixel line was the underdog. The scrappy kid on the block, fighting against the Samsungs and Apples of the world. They weren’t just selling a phone; they were selling the Android experience. Pure, unadulterated, straight from the source. Remember the Nexus days? (Those were the real Pixels, if you ask me.) Google promised us innovation, a camera that could rival the best, and software that was always one step ahead. And for a while, they delivered. But somewhere along the line – and I’m looking at you, Pixel 6 – things started to… stagnate. The competition caught up, and Google’s ‘exclusive’ features started feeling less ‘exclusive’ and more ‘finally catching up.’

So, what’s the big takeaway from this survey? Apparently, people buy Pixels for a laundry list of reasons: camera, exclusive features, safety, stock Android – the ‘all of the above’ crowd. And look, I get it. There’s a certain appeal to a phone that tries to do everything well. But here’s the thing: that’s not a strength yet, but it has the potential to be. Right now, it feels a bit like a jack-of-all-trades situation. The camera? Solid, reliable – but can it truly claim to be the best anymore? ‘Exclusive’ features? Some are genuinely useful, others… less so. Safety? Absolutely a plus, no argument there. And stock Android? A clean, bloat-free experience is always appreciated. But the question remains: where’s the spark? Where’s the innovation that made the early Pixels so exciting? They’re good, even very good – but they could be so much more.

Let’s be real, the AI features are where Google’s Pixel is still king – or at least, a solid prince. Magic Eraser? Magic Editor? Game changers. I’ve seen Apple’s attempt at a cleanup tool, and frankly, it’s like comparing a Picasso to a finger painting – impressive for a toddler, less so for a trillion-dollar company. And don’t even get me started on call screening and spam detection. Life before these features was like navigating a minefield of robocalls and sketchy texts. Now? It’s like having a personal assistant who actually knows what they’re doing. Then there are the little things – the ‘Now Playing’ feature that identifies songs without you lifting a finger, the real-time translation that actually works in noisy environments, the way the camera just gets the right shot, even in tricky lighting. These aren’t just gimmicks; they’re genuinely useful AI-powered tools that make your life easier. And while the competition is scrambling to catch up, Google’s Pixel is still setting the pace. (Though, let’s be honest, they could still push the envelope a bit more. Imagine what they could do if they truly leaned into ‘Pixel Sense’ and integrated all those sensors and AI into a seamless, contextual experience…)

So, where does this leave us? Pixel, in its current state, is a paradox. It’s a phone that excels at the mundane – the everyday tasks, the little annoyances that AI can smooth over. But it’s also a phone that’s lost its edge, its sense of daring. It’s become… safe. Too safe. And in a market that’s constantly evolving, constantly pushing boundaries, ‘safe’ is a death sentence. Google needs to remember what made Pixel special in the first place – the innovation, the willingness to take risks. They need to stop playing it safe and start pushing the envelope again. Because if they don’t, they’ll find themselves not just trailing behind the competition, but forgotten altogether. And that – that would be a tragedy.

Fitbit App Update Causes Inaccurate Pace and Lap Data, Frustrating Users

A recent update to the Fitbit app appears to have introduced a significant bug affecting how exercise data, specifically pace and lap information, is displayed. Users have taken to the Fitbit community forums to voice their frustration over wildly inaccurate metrics showing up after their workouts.

The issue gained traction last year in a thread titled “Pace/Lap display in Exercise”, where the original poster described a confusing discrepancy:

From Jdmcb66: “I have a Charge 6 and Iphone and within the past few days my laps/pace on my exercise details page has gone crazy. I walked tonight, 2.23 Miles at average 19' pace. The detail at the bottom says Lap 1 - 11'41”/mi = 3.63 miles. I know my laps are set at 1.0 mi. I have gone back and earlier exercises that had lap1, lap 2, etc… now have similar one lap with ludicrous distances and no individual laps. This makes me nuts, as a runner I want to know what my lap times are for training and improvement."

Investigating the forum thread reveals this isn’t an isolated incident affecting only one type of device pairing. Comments pour in from a mix of users, including longtime Fitbit device runners using iPhones and newer users pairing Pixel Watches with Pixel phones. The common denominator is the Fitbit app exhibiting incorrect calculations, consolidating multiple laps into one inaccurate entry and misreporting distances and paces.
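
For reference, here is roughly what the original poster expected to see instead of one consolidated lap. This is a hypothetical Python sketch, not Fitbit’s code: the `split_laps` helper is my own illustration, and it assumes a perfectly constant 19’/mi pace for the whole walk (real splits come from GPS and step data and would vary). It splits the 2.23-mile walk into 1.0-mile laps and prints a time for each.

```python
def split_laps(total_hundredths: int, lap_hundredths: int = 100) -> list[int]:
    """Split a distance (in hundredths of a mile) into full laps plus a partial last lap.

    Working in integer hundredths avoids floating-point drift when subtracting laps.
    """
    full_laps, remainder = divmod(total_hundredths, lap_hundredths)
    return [lap_hundredths] * full_laps + ([remainder] if remainder else [])


# Assumption for illustration only: the pace is constant across the whole walk.
PACE_MIN_PER_MI = 19.0

for number, lap in enumerate(split_laps(223), start=1):  # 2.23 mi walk, 1.0 mi laps
    miles = lap / 100
    seconds = round(miles * PACE_MIN_PER_MI * 60)
    minutes, secs = divmod(seconds, 60)
    print(f"Lap {number}: {miles:.2f} mi in {minutes}'{secs:02d}\"")
```

Run as-is, it prints two full laps at 19’00” each and a final 0.23 mi partial lap of 4’22”, which is the lap 1, lap 2, etc. breakdown users say has disappeared from their exercise details.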

This problem was highlighted to me recently within the Pixel Superfan community. One member expressed their disappointment quite directly:

“So the fitbit app is now useless for tracking exercise because they are doing incorrect unit conversions."
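
That unit-conversion theory holds up to a quick back-of-the-envelope check. This is my own arithmetic, not anything Fitbit has confirmed, and the `format_pace` helper is hypothetical: take the walk from the original forum post (2.23 mi at roughly 19’/mi, a figure the poster rounded), convert it to kilometers, and compare the result with the strange lap row the app displayed.

```python
MI_TO_KM = 1.609344  # exact international mile-to-kilometer factor


def format_pace(minutes_per_unit: float) -> str:
    """Format a decimal pace (minutes per unit) as a minutes'seconds string."""
    total_seconds = round(minutes_per_unit * 60)
    minutes, seconds = divmod(total_seconds, 60)
    return f"{minutes}'{seconds:02d}\""


# Figures from the forum post; the 19'/mi average was rounded by the poster.
distance_mi = 2.23
pace_min_per_mi = 19.0
duration_min = distance_mi * pace_min_per_mi  # roughly 42.4 minutes

# Hypothesis being tested: the lap row shows metric values under a "mi" label.
distance_km = distance_mi * MI_TO_KM
pace_min_per_km = duration_min / distance_km

print(f"Walk in miles: {distance_mi:.2f} mi at {format_pace(pace_min_per_mi)}/mi")
print(f"Same walk in km: {distance_km:.2f} km at {format_pace(pace_min_per_km)}/km")
```

The metric line works out to about 3.59 km at 11’48” per km, close to the “3.63 miles” at 11’41”/mi the app showed. That fits the idea that kilometer values are being displayed under a miles label, though it isn’t proof.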

For users who rely on detailed lap splits and accurate pace information for training purposes, this bug renders a core function of the Fitbit experience unreliable. Fitbit moderators have acknowledged the issue, and yesterday they advised users to update to the latest version of the Fitbit app (4.38 or higher) to receive the fix. However, users are still seeing the problem and are eagerly awaiting a fix that restores accurate exercise tracking. If Google wants to position the Pixel Watch 3, with its pace and lap tracking, as the device for runners, it needs to get this right before users switch to competing fitness trackers.

Why Google Meet Was Perfect for Setting Up My Parents' Google Voice

I recently used Google Meet to help my parents set up a Google Voice number for their church ministry, and it was such a seamless experience.

Here are a few things I absolutely loved:

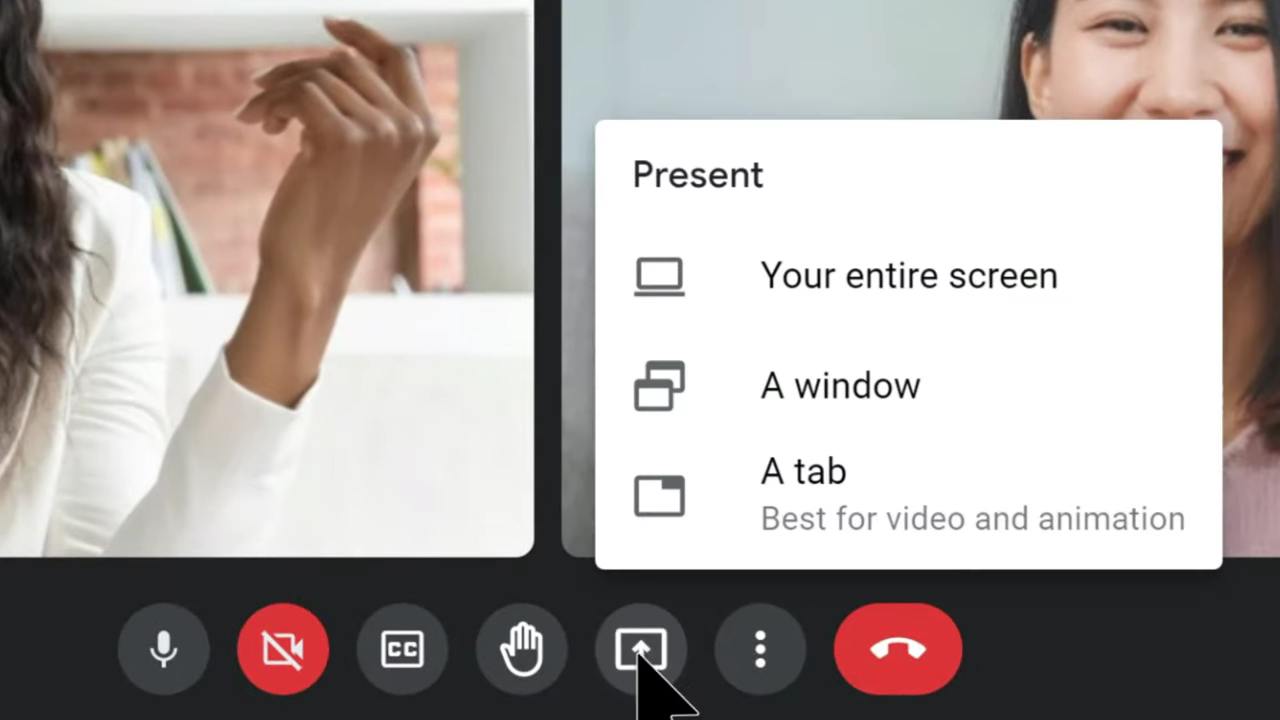

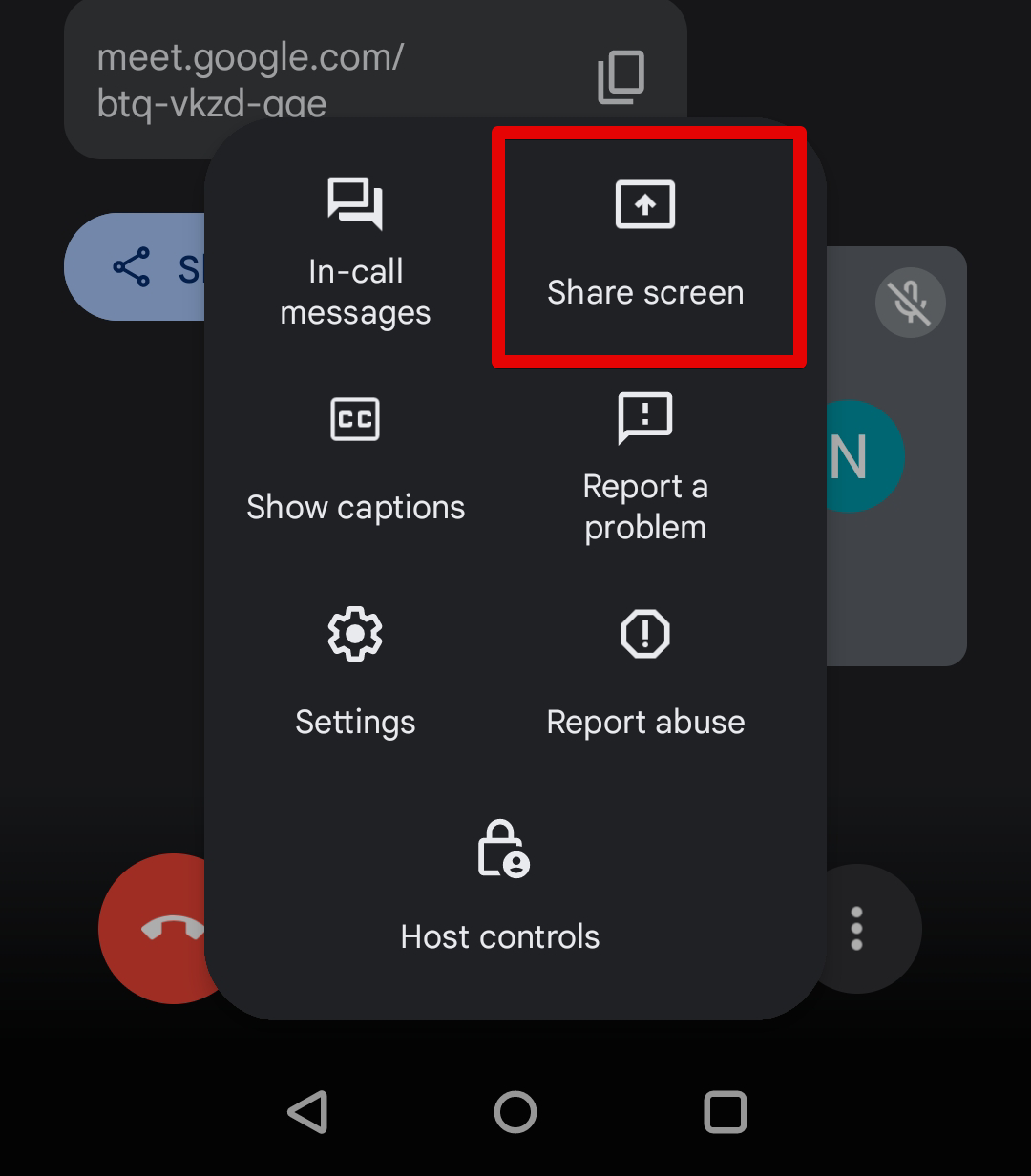

‘Share Screen’ on Google Meet

Being able to see what’s on my parents’ screen while walking them through setting up their Google Voice number worked wonders. I could have used Chrome Remote Desktop (CRD) and, with their permission, taken control of their mouse to set it up for them, but I wanted them to learn it on their own with my guided instructions. I’ve used CRD in the past, and it works great too.

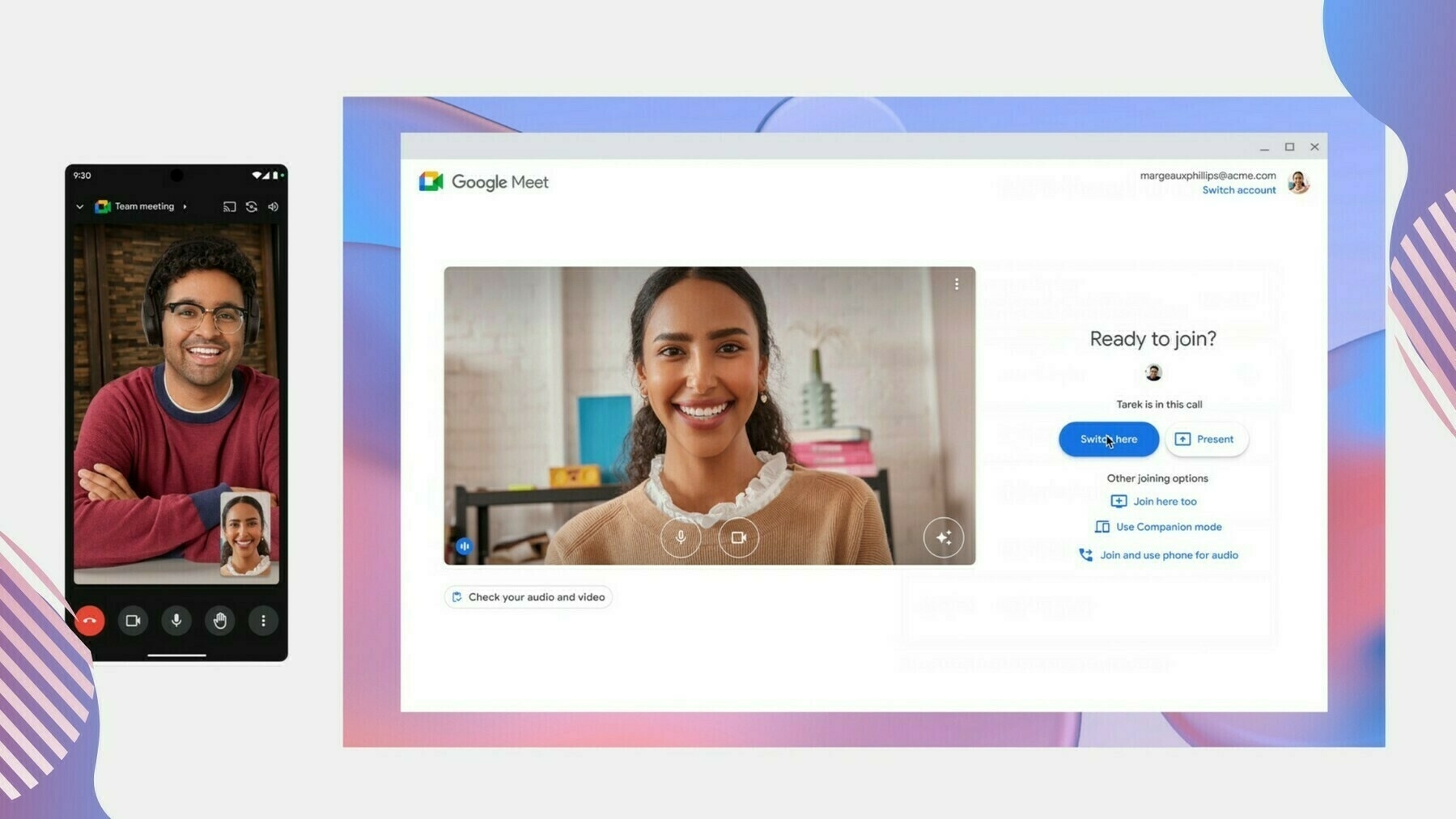

Google Meet ‘Switch Here’

Switching between Chrome and Android devices is so seamless that my 70-year-old dad was able to do it. After I walked him through setting up his Google Voice number on the computer, I wanted to walk him through installing Google Voice on his Android phone. I told him to “join the meeting on your phone,” and he noticed the “Switch Here” icon. Tapping it brought him right into the call on his phone and removed him from the call on the computer. That way, we could do another Share Screen from his Android phone.

Google’s Material Design Language

Something subtle but incredibly intuitive is Google’s cohesive icons and Material Design language. Because my parents have been using Android devices for at least a decade now, they are familiar with Google’s Material Design icons. When I tell them to “tap on the settings icon”, “tap on the messages icon”, or “tap the menu icon”, they immediately know I’m referring to the gear icon, the message bubble icon, and the three dots.

It’s great experiences like this that let me know I made the right choice in giving my parents an affordable Chromebook and Android device now that they are retired. They get so much done without breaking the bank, with the peace of mind that comes from speed, simplicity, and security. And they are the envy of their friends thanks to their Pixels’ “Call Screening” feature.

Decoding Gemini 2.5 - Google DeepMind Signals a Leap in AI Cognition

The world of artificial intelligence keeps moving fast, and Google DeepMind is definitely staying near the front of the pack. News coming out of the research group points toward Gemini 2.5, the next step up from their already impressive Gemini AI models. While we don’t have all the technical details yet, Google is highlighting a big jump in the model’s ability to “think.” This isn’t just about crunching more data or writing longer paragraphs; it seems they’re really focused on making the AI better at reasoning, planning things out, and following complex instructions. For tech watchers and folks like me interested in where this is all going, this focus on cognition is key. It’s about moving AI beyond just recognizing patterns and predicting the next word. The goal is systems that can truly get the intent behind a request, break down tricky problems, and work towards a goal. The potential improvements in Gemini 2.5 could start closing the gap between today’s AI, which handles specific tasks well, and a future AI that acts more like a thinking partner. It’s like the difference between a really fast calculator and someone who can actually formulate a complex strategy; Google looks to be nudging Gemini in that more advanced direction.

Achieving this better reasoning involves refining the underlying AI architecture. We’ll likely see new techniques, maybe related to Chain-of-Thought ideas or better ways for the model to keep track of information internally. This would allow it to hold context longer, check its own work mid-task, and switch tactics if needed when dealing with complicated, multi-part problems. For example, as a developer, I might want an AI to do more than just spit out a code snippet. I could ask it to design a whole feature for a web app – maybe something involving JavaScript and Tailwind CSS on the front end, with PHP handling things in the back, possibly interacting with Drupal – while considering scalability, security, and coding standards.

Current models can stumble over such broad requests, needing a lot of back-and-forth. Gemini 2.5, with its boosted planning and reasoning, aims to understand and tackle these complex instructions more effectively right from the start, which could seriously speed up workflows and open doors for more creative development. Better reasoning also means the AI should get better at handling fuzzy language and understanding subtle meanings, which is crucial for natural interaction. The impact goes way beyond just developer tools; think about smarter information discovery in Search, more helpful automation in Workspace, and richer experiences on Android. That last part is especially interesting to me, given my preference for Android and devices like my Pixel 9 Pro. As Google weaves these smarter models into everything they do, Gemini 2.5’s improved “thinking” could bring real, noticeable benefits, making tech feel more intuitive and powerful. Pulling this off takes massive computing power and research talent, showing Google DeepMind’s significant role in pushing AI forward globally.

Looking closer at what “thinking” means for an AI, the upgrades suggested for Gemini 2.5 go well beyond just knowing facts or writing smoothly. Better reasoning points to a stronger grasp of logic, cause-and-effect, and the ability to spot inconsistencies in information. This could lead to AI systems that not only answer tough questions correctly but can also explain how they got the answer, building more trust. Picture an AI tutor guiding a student step-by-step through a tricky problem, or a research tool comparing conflicting sources and pointing out the differences. The focus on planning and handling multi-step tasks suggests the AI has a better internal sense of the world and can work towards a goal methodically. Instead of just reacting to the last thing said, Gemini 2.5 looks designed to map out a sequence of actions, maybe even predict roadblocks, and stick to a strategy to complete a complex user request. This ability is vital for everything from self-driving systems needing to navigate busy streets to project management software that can break down big objectives into smaller, manageable steps.

In web development, this cognitive sophistication could mean an AI assistant that understands a high-level feature idea, sketches out the necessary front-end (JS, Tailwind) and back-end (PHP, maybe Drupal hooks) pieces, suggests database changes, writes starting code for different modules, and even proposes how to test it all. That’s a much more involved and proactive partner than one just giving isolated code examples. This level of smarts demands serious computational resources, both for training the model and running it, plus clever new algorithms and efficient designs. Google DeepMind is likely using advanced techniques, possibly including sophisticated feedback loops during training that specifically reward good reasoning and planning, along with architectural tweaks for more structured thinking. As an Android fan, the idea of this advanced reasoning running efficiently enough for on-device tasks on future Pixels or other devices is really appealing, potentially enabling next-level Google Assistant features or other integrations that feel truly intelligent without needing the cloud for every little thought. Of course, this increased capability also brings significant responsibility. Google knows the ethical tightrope that comes with powerful AI. Developing Gemini 2.5 surely includes deep safety checks and work on alignment to prevent misuse, reduce bias, and ensure the AI behaves in ways we want. The road to human-like artificial general intelligence is still very long, but steps like Gemini 2.5 are major markers along the way, expanding what machines can understand and do, and bringing us closer to AI that doesn’t just process data, but genuinely “thinks” in ever more useful ways.

Google Shifts Android Development to Fully Private Mode

In a significant move, Google has announced that it will be transitioning to fully private development of the Android operating system after 16 years. This change, confirmed by Google to Android Authority, aims to streamline the development process and reduce discrepancies between public and internal branches.

Previously, Google shared some development work on the public AOSP Gerrit. Now, all development will occur internally. Google has committed to publishing source code to AOSP after each release. While AOSP accepts third-party contributions, Google primarily drives development to ensure Android’s vitality as a platform.

Mishaal Rahman makes one thing clear over on Mastodon:

“Just to be clear: Android is NOT becoming closed source!

Google remains committed to releasing Android source code (during monthly/quarterly releases, etc.) , BUT you won’t be able to scour the AOSP Gerrit for source code changes like you could before.”

This shift will likely have minimal impact on regular users and most developers, including app and platform developers. However, external developers who actively contribute to AOSP will experience reduced insight into Google’s development efforts. Reporters will also have less access to potentially revealing information previously found in AOSP patches. Google has stated that it will announce further details and new documentation on source.android.com.

Dear Google: Let’s Talk About the Pixel (Comments from Reddit)

A 13-year iPhone customer finally made the switch to the Google Pixel 9 Pro and shared some great feedback about his experience with Pixel. Some of his points simply come down to the difference between a closed ecosystem controlled by one company and a more open ecosystem that is semi-controlled by one company but follows an open philosophy, if that makes sense. I replied to his post on Reddit, and I’ve shared my comments below. Under each numbered point, the original redditor’s comments come first, followed by mine. Enjoy.

I love all of these suggestions and would welcome every one of them, but I’d like to share my thoughts on each. I’ll skip the first one since it was positive feedback.

2. Improve the Tensor Chip

Make the Tensor chip as fast as the top-tier Snapdragon or the latest iPhone’s chip. I’ve noticed some lag, especially when gaming. Sure, 99% of people don’t game heavily on their phones, but when you have an iPhone, you know it can handle it if you want. That’s a feeling of security.

I think this is coming with time. Both Qualcomm and Apple have been making their own chips for more than a decade now. Google partnered with Samsung on its first Tensor chip (codenamed Whitechapel) and newer chips to jumpstart its own chip-making experience, while still being able to customize and tweak the AI experience on its own devices, similar to how Apple learned from Intel before switching to its own silicon. Overall, I think this is coming, but with a caveat: Google will more than likely rely on a combination of on-device and cloud computing, so raw local power won’t always be the focus. That’s especially true since more local power requires a lot more R&D, would eat into margins, and would more than likely raise device prices beyond the usual economic increases.

3. Enhance Video Recording

You guys take incredible photos, so keep it up. But please work on getting the video quality closer to iPhone levels—especially when switching lenses mid-recording.

I think you’re right on with this, and Google is clearly advancing here. The new Tensor G5 chip coming this fall adds Google’s own custom ISP and replaces “BigWave” (Google’s in-house video codec) with the WAVE677DV, which suggests the latter may offer advantages in performance, power efficiency, or a combination of factors, along with the added benefit of multi-format support.

4. Optimize Your Own Apps

Some Google apps are smoother on iPhone than on Pixel. Why is Google Maps smoother on iPhone, for example? Please optimize your apps to perform best on your own phone.

I’ve actually found that Google Maps works better on Pixel than it did on iPhone, at least for me in the past. This is obviously anecdotal.

5. Get Third-Party Apps Onboard

Make sure popular social media and messaging apps are optimized for Pixel. This is crucial for everyday use.

I want this too. Google has worked with Instagram, Snap, and the YouTube team for a few years and has added some exclusives that aren’t available on iOS, like Night Sight in Instagram, live video switching between Pixels, and Live Transcribe. Still, there is a lot more work to be done, and the CameraX API is the key. Fragmentation is the issue here, and Google’s balancing act between prioritizing Pixel and prioritizing Android as a whole comes into play.

6. Merge Google Meet & Messages

Consider blending Google Meet and Google Messages into one seamless experience (similar to FaceTime, iMessages). It would simplify communication across Android ecosystem.

From my understanding, it’s the same. Messages and FaceTime are two completely separate apps. You can uninstall FaceTime and still have Messages, and I assume that if you could uninstall Messages on iOS, you could still use FaceTime. I might not fully understand what you mean here, but I think the two setups are pretty similar. I think WhatsApp, Telegram, and Signal are the only ones that truly blend messaging and calling. But as far as I know, Google Meet and Google Messages are as seamless together today as FaceTime and Apple’s Messages.

7. Add Built-In Magnets

Implement magnets inside the phone (like MagSafe on iPhone). There are tons of accessories that rely on this feature.

I’m not opposed to this, but is it absolutely needed? Most people have a case on their phone. Does the Pixel need magnets if more cases have magnets? I think that’s up for debate. I know a lot of people like to go caseless, but anecdotally, for every caseless courageous person I see in public, I see 10 cas(r)eful cautious people.

8. Strengthen the Ecosystem

One major reason people stick with iPhone is because of Apple’s ecosystem. Web apps are fine, but if possible, create native desktop apps (Messages, video calling, Notes, Reminders, Calendar, Photos, etc.) for both Mac and Windows. That would help lure more people in.

Would native desktop apps do that? Why? By what metric? What benefit do customers get from having a native Messages app as opposed to a web one? Web apps don’t take up space on the local machine, and they go wherever you are. If the features are consistent between the two, I don’t see the need for native versions.

As for the ecosystem, I agree, and that definitely takes a lot more time because Google can’t, and probably won’t, force any OEM, developer, etc. to do things that solely benefit Google. Apple can and does do that at the expense of third-party accessory makers and developers.

9. Bolster Core Android Features

Keep improving Android at its core. Make it even more robust, smooth, and user-friendly.

No notes. This is good. Progression is good.

10. Elevate the Watch Experience

The Pixel Watch needs more attention. Aim to match (or beat) the Apple Watch’s functionality, integration, and polish.

I hear this a lot, but I don’t think people are ready to spend $700 to $800 on a Pixel Watch. It’s still new, and getting as many Pixel Watch experiences out the door as possible, balanced with providing premium fitness experiences, is more important. For a company that has only made three watches, I think Google has done great. Remember, Samsung and Apple have had watch accessory businesses for at least 10 years. That comes with time, but Pixel is advancing at a fast pace. With all that said, I do think the Pixel Watch needs smoother animations and better loading performance. The UX journey in and out of apps needs to be a lot more polished, with animations that expand from a specific point on the screen instead of apps just appearing.

Lastly, a Big One:

Google is known for killing projects quickly, which might be great for engineers, but from a customer’s standpoint, it shows a lack of long-term commitment. When you launch something, it’s hard for us to fully adopt it because we suspect it’ll be discontinued. That attitude does more harm to your reputation than anything else. Please rethink it.

I LOVE this one and totally agree. I think that after the massive backlash, and after cutting a lot of extra weight like its many messaging apps, Google is in a lean place and seems to be holding on to things as they are today. Google Assistant going away isn’t a killing; it’s an evolution. However, there are times when it makes sense to kill something that just isn’t working or hasn’t been working for years.

This is great feedback from a long-time iPhone customer. Some things just don’t apply to Android, or even to Google. I will say, though, that if you expect Google to completely control things and force its hand the way Apple does, you will be disappointed.