Category: Longform


Decoding Google's Linux Terminal for Android: It's ChromeOS All Over Again (and That's Great!) + My Wishlist for Killer Apps & Future Hardware

I want to break down this interesting comment from a Google employee about the upcoming Linux terminal on Android.

The main purpose of this Linux terminal feature is to bring more apps (Linux apps/tools/games) into Android, but NOT to bring yet another desktop environment. Android, as speculated by the public, will have better desktop-class windowing system. We think it would in general be bad to present multiple options for the window management on a single device. Ideally, when in the desktop window mode, Linux apps shall be rendered on windows just like with other native Android apps.

This however doesn’t mean that we prohibit the installation of any Linux desktop management system (xfce, gnome, etc.) in the VM. I just mean that those won’t be provided as the default experience as you would expect. But, because Android is an open-source project, I wouldn’t be surprised if there will be any device maker who ships such a Linux desktop management system by default.

And GPU acceleration is something we are preparing for the next release. Stay tuned! :)

As a long-time ChromeOS user myself, I’m personally buzzing with excitement, because this isn’t just about a command line; it’s a potential paradigm shift for Android’s capabilities. What exactly are we talking about? You can find more background details about this upcoming terminal in this Android Authority article from THE Mishaal Rahman. Seriously, stop reading this and go read that and come back.

The key takeaway is right in the first line: “The main purpose of this Linux terminal feature is to bring more apps (Linux apps/tools/games) into Android, but NOT to bring yet another desktop environment.” Think of it like this: Google isn’t trying to turn your phone into a full-blown Linux desktop in your pocket (at least, not directly). They’re aiming to unlock a vast library of existing Linux software and make it available to Android users. Imagine running powerful command-line tools, niche utilities, or even Linux-exclusive games directly on your Android device. But the implications run deeper than just running a few extra apps.

The comment then turns to the windowing system on Android and what it should look like. The Google employee says Android will have a better desktop-class windowing system. Essentially, if you’re using Android in a desktop mode (think plugged into a monitor, with a keyboard and mouse), Linux apps should behave like native Android apps, fitting seamlessly into the existing window management system. This tells us a few things: Google is definitely thinking about desktop mode on Android, and they’re prioritizing a consistent user experience. This brings us to Google’s official position.

The next section is crucial: “…those won’t be provided as the default experience as you would expect. But, because Android is an open-source project, I wouldn’t be surprised if there will be any device maker who ships such a Linux desktop management system by default.” This is Google walking a tightrope. They’re not officially supporting a full Linux desktop environment (like XFCE or GNOME) out of the box. Instead, they’re leaving the door wide open for device manufacturers (like Samsung, Xiaomi, etc.) to build their own implementations on top of the foundation they’re providing, setting the stage for innovation.

If you want a true Linux desktop experience on your Android device, you probably won’t get it straight from Google, but expect some clever companies to ship devices with custom Android builds that integrate a full desktop environment. If this sounds familiar, it’s because this strategy mirrors Google’s approach to integrating Linux into ChromeOS. Chromebooks have had Linux support (via a container called Crostini) for years, and the experience is remarkably similar to what the Google employee is describing for Android.

On ChromeOS, Linux applications run in a container and are displayed as regular windows alongside Chrome apps. The underlying Linux environment is there, accessible via the terminal, but it’s not the default user experience. ChromeOS retains its primary focus, while Linux provides a powerful expansion of its capabilities. This is a good thing for a number of reasons.

The ChromeOS implementation has been largely successful, and applying that experience to Android is a smart move. A proven model brings several benefits: security and isolation, minimal impact on the core OS, and flexibility. All of these things lead to the most exciting tidbit.

The final, and arguably most exciting, tidbit: “And GPU acceleration is something we are preparing for the next release. Stay tuned! :)” This means that Linux apps running on Android will be able to leverage the device’s GPU for improved performance. Think smoother graphics, faster processing, and the possibility of running more demanding applications and even gaming. GPU acceleration is available for Crostini on ChromeOS and has massively improved the performance and usability of Linux apps that require it. What does this actually mean for users?

Based on my own ChromeOS experience, Linux apps that are likely to be game-changers on Android cover a few universes: Web Development Stacks, Code Editors, Image and Video Editors, Command-Line Tools, Retro Gaming Emulators.

Having these opened up on Android would be incredible. I can personally attest to the improved workflow that Linux applications on ChromeOS bring, but that is one part of what this upgrade can bring.

It’s incredibly exciting, but it also highlights the need for more powerful ARM-based hardware in both the Android and ChromeOS ecosystems. I’m really hoping that chipmakers like Qualcomm start bringing their Snapdragon X Elite series of chips (or similar performance-class processors) to Chromebooks as well. Imagine a ChromeOS device with the performance and efficiency of those new ARM chips – it would be a true game-changer for running demanding Linux applications! The Snapdragon X Elite is showing so much potential on Windows, and it would be amazing if Chromebooks could leverage that same power. The more powerful the chip is in these devices, the better these apps will be!

I’m really looking forward to Android’s Linux integration, and I’m confident that it will unlock a whole new world of possibilities for mobile productivity and creativity.

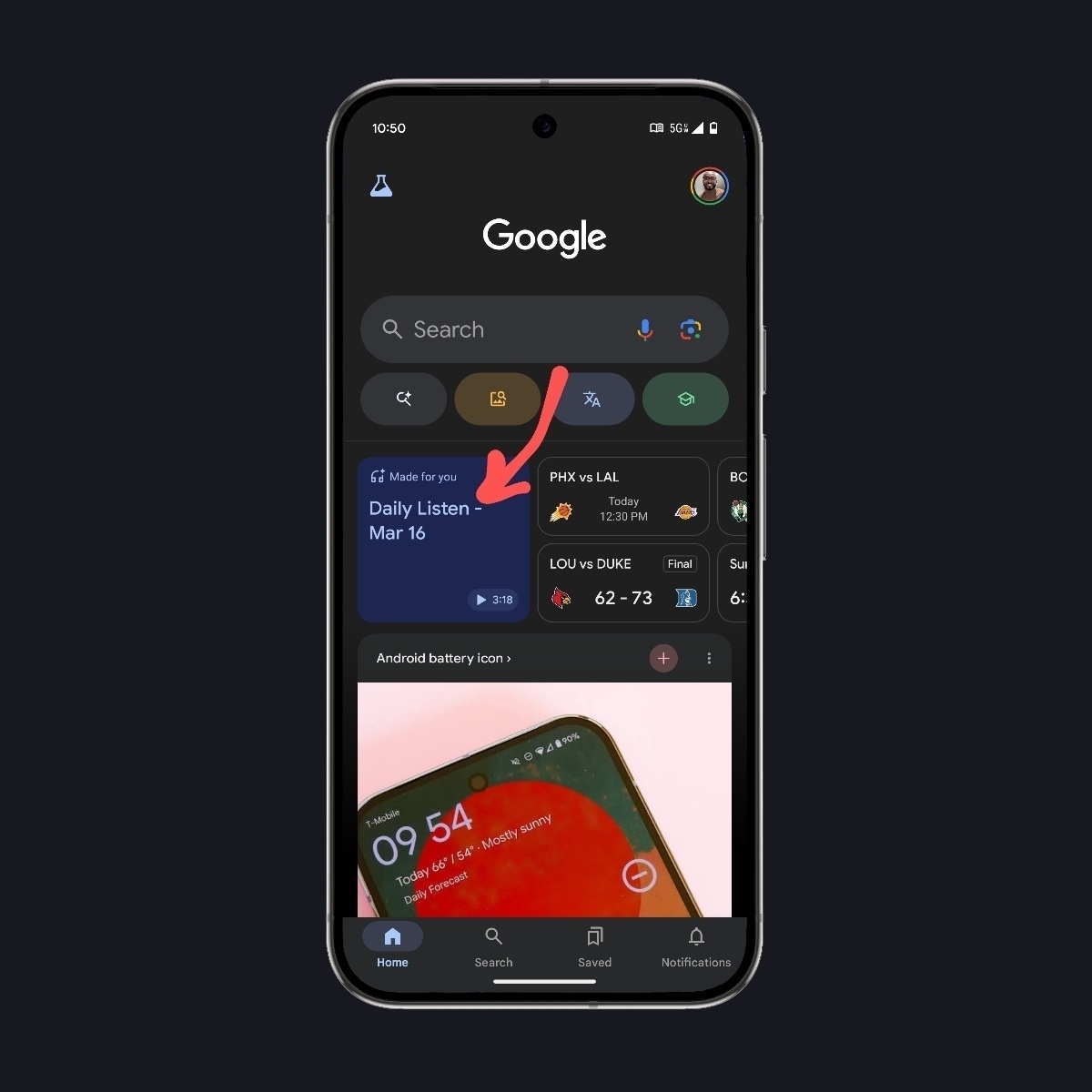

Google's "Daily Listen": Your Discover Feed, Now in Audio


Google is constantly experimenting with ways to make information more accessible and digestible. The latest foray into this arena comes in the form of “Daily Listen,” an experimental feature tucked away in Google Discover within Google’s Search Labs. Imagine transforming your personalized Discover feed into a short, easily consumable audio podcast – that’s the core concept behind Daily Listen.

Here’s a quick breakdown of “What it is”, “How it works”, and “How Pixel customers can use it”:

* What it is:

    * It’s an AI-powered audio experience that provides a brief, roughly five-minute summary of the topics you’re interested in, based directly on your Google Discover feed. Think of it as a personalized news bulletin curated by your own browsing habits.

    * Essentially, it’s an audio version of your Discover feed, perfect for catching up on the go, during your commute, or while multitasking. I’ve personally found that these small, bite-sized, consumable podcasts are incredibly useful. Services like “Daily Listen” fulfill that niche of providing just enough information without the fluff that often bogs down longer-form content. Look at the success of podcasts like TechMeme Ride Home and even the long-running “MacOS Ken” - concise, informative briefings are a real win.

* How it works:

    * It uses AI to gather and summarize content from your Discover feed, creating a personalized podcast episode. The magic goes deeper than simple text-to-speech. The AI is likely leveraging techniques like:

        * Natural Language Processing (NLP): To understand the core themes and key arguments within the articles in your feed.

        * Summarization Algorithms: To condense longer articles into concise and digestible snippets, capturing the essence of the information.

        * Topic Modeling: To identify recurring themes and group related content, ensuring the audio episode is coherent and well-structured.

        * Voice Cloning or Text-to-Speech (TTS) with Style Transfer: Although not confirmed, it is possible Google is experimenting with using voice cloning to produce more natural sounding “Daily Listen” content, potentially with subtle inflections based on the tone of the original article. Another possibility is employing style transfer to adjust the TTS’s pitch and tone for a more human-like reading, instead of a robotic one.

    * The content selection is based on your search history and interactions with the Discover feed. This means the algorithm considers:

        * Keywords in your searches: What you’ve actively looked for provides explicit signals about your interests.

        * Websites you frequently visit: If you’re a regular reader of a particular news site or blog, content from that source is more likely to appear.

        * Topics you’ve engaged with in Discover: Articles you’ve clicked on, shared, or saved are strong indicators of your preferences.

        * Feedback you’ve provided: Clicking “More like this” or “Less like this” on Discover cards directly influences future content recommendations.

    * Technical Analysis Considerations: The back-end likely utilizes a combination of Google’s existing AI infrastructure, possibly leveraging TensorFlow or similar frameworks. The system would need to continuously monitor and update your profile of interests in real-time, re-running the summarization and aggregation algorithms each time a new Daily Listen is generated. This necessitates significant computational power and optimized algorithms to deliver the five-minute summary efficiently. Furthermore, the system would require efficient caching mechanisms to prevent redundant processing for users with relatively stable browsing habits.

* How Pixel customers can use it:

    * First, users must opt-in to Search Labs within the Google app. Open the Google app, tap your profile picture, go to “Settings,” then “Search Labs,” and enable “Daily Listen.”

    * Once enabled, a “Daily Listen” card will appear in the Discover feed. The appearance and frequency of this card may vary depending on the availability of relevant content and the algorithm’s confidence in its relevance to your interests.

    * Tapping the card generates the personalized audio episode. The AI then springs into action, compiling and narrating the audio summary.

    * The user will then have audio control functions, such as pause, rewind, and fast forward, allowing for a user-friendly and adaptable listening experience.
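To make the “How it works” speculation above a bit more concrete, here is a toy Python sketch of how interest signals, a five-minute budget, and a cache could fit together. To be clear, none of this is Google’s implementation: the `Article` fields, the profile keys, the signal weights, and the 150-words-per-minute narration speed are all assumptions I made up for illustration.

```python
import hashlib
import json
from dataclasses import dataclass

WORDS_PER_MINUTE = 150  # assumed narration speed, not a published figure


@dataclass(frozen=True)
class Article:
    title: str
    topic: str
    source: str
    summary: str  # stands in for the output of a summarization model


def score(article, profile):
    """Add up hypothetical weights for each interest signal the article matches."""
    total = 0.0
    title_words = set(article.title.lower().split())
    total += 1.0 * len(title_words & profile["search_keywords"])
    if article.source in profile["frequent_sites"]:
        total += 1.0
    if article.topic in profile["engaged_topics"]:
        total += 2.0
    if article.topic in profile["less_like_this"]:
        total -= 5.0  # explicit negative feedback outweighs everything else
    return total


def build_episode(articles, profile, minutes=5):
    """Pick the best-scoring summaries that fit into the listening budget."""
    budget = minutes * WORDS_PER_MINUTE
    scored = sorted(((score(a, profile), a) for a in articles),
                    key=lambda pair: pair[0], reverse=True)
    chosen, used = [], 0
    for points, article in scored:
        if points <= 0:
            break  # everything after this is irrelevant or disliked
        length = len(article.summary.split())
        if used + length > budget:
            continue  # too long for the remaining time, try a shorter one
        chosen.append(article)
        used += length
    return chosen


def cache_key(articles, profile):
    """Hash the feed and the interest profile so unchanged inputs map to one key."""
    payload = {
        "titles": sorted(a.title for a in articles),
        "profile": {name: sorted(values) for name, values in profile.items()},
    }
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()


_episode_cache = {}


def cached_episode(articles, profile):
    """Rebuild the episode only when the feed or the profile actually changed."""
    key = cache_key(articles, profile)
    if key not in _episode_cache:
        _episode_cache[key] = build_episode(articles, profile)
    return _episode_cache[key]
```

Calling `cached_episode(feed, profile)` twice with the same feed and profile returns the stored episode instead of re-ranking everything, which is the “stable browsing habits” shortcut described above. A real system would obviously do far more (actual summarization, speech synthesis, freshness), but the shape of the problem is the same.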

Daily Listen and the Potential Connection to Notebook LM:

While not explicitly stated, it’s intriguing to consider the potential relationship between Daily Listen and Google’s Notebook LM (formerly Project Tailwind). Notebook LM is Google’s AI-powered note-taking tool that summarizes, analyzes, and generates insights from uploaded documents. The core summarization technology powering Daily Listen could be a direct application, or at least a close relative, of the same AI models used in Notebook LM.

Here’s why:

* Shared Summarization Capabilities: Both Daily Listen and Notebook LM rely heavily on AI-powered summarization. The same algorithms that condense lengthy research papers in Notebook LM could be adapted to summarize news articles in Daily Listen.

* Understanding Context and Theme: Notebook LM is designed to understand the context and key themes within complex documents. This understanding is crucial for creating accurate and informative summaries, a skill directly applicable to the Daily Listen feature.

* Potential for Personalized Learning: Imagine a future where you can upload your own articles, research papers, or meeting notes to Notebook LM, then have Daily Listen create a personalized audio summary of your content. This could be a powerful tool for learning, staying organized, and retaining information.

Google’s “Daily Listen” is more than just a fun experimental feature; it represents a powerful application of AI to personalize information consumption. Although the feature is still in its early stages, the underlying technology, potentially connected to advancements in tools like Notebook LM, suggests a future where AI-powered audio summaries become a commonplace way to stay informed and engaged.

Google Store Santa Monica: A Pixel Experience Opens Its Doors

The Google Store has opened its doors in Santa Monica, bringing a new space for innovation and the Google community. A select group of Pixel Superfans received an exclusive preview before the official opening. This special event included an opportunity to meet members of the Pixel team and Ivy Ross, Chief Design Officer for Consumer Devices at Google. The Superfans were able to explore the store’s displays and experience the devices firsthand.

“It was interesting to see the people behind the products,” shared a fellow Pixel Superfan. “Meeting Ivy Ross provided insight into the Pixel’s design philosophy.”

Google expressed appreciation for its Pixel Superfans, acknowledging their contribution to the Pixel’s development. A contest was also held, offering Superfans the chance to win additional Google and Pixel merchandise. The Google Store in Santa Monica aims to be a community space, showcasing Google’s innovations and offering learning opportunities through interactive workshops and personalized support.

Located in Santa Monica, the Google Store is open to the public. Visitors can explore the latest technology and learn more about Google’s products.

Google Please Do This At Google I/O 🙏🏾

John Gruber provides a genius script for what Google should do at Google I/O 2025:

Presenter: This is a live demo, on my Pixel 9. I need to pick my mom up at the airport and she sent me an email with her flight information. [Invokes Gemini on phone in hand…] Gemini, when is my mom’s flight landing?

Gemini: Your mom’s flight is on time, and arriving at SFO at 11:30.

Presenter: I don’t always remember to add things to my calendar, and so I love that Gemini can help me keep track of plans that I’ve made in casual conversation, like this lunch reservation my mom mentioned in a text. [Invokes Gemini…] What’s our lunch plan?

Gemini: You’re having lunch at Waterbar at 12:30.

Presenter: How long will it take us to get there from the airport?

People have been piling on Apple for its massive Apple Intelligence misstep: marketing a feature that was never ready in order to sell another product. As John Gruber said in his blog post, Google should do a live demo, in front of a live audience and on a live stream, of the exact thing that Apple said Siri would be able to do, to truly prove that Gemini and Google Pixel are great products and a match made in tech heaven.

As John Gruber said, Apple just handed Google a potential marketing gift.

Google's Gemini Transition: A Necessary Step, But Execution is Key

Honestly, I wasn’t sure what Google’s long-term plans were for Assistant. Given their history with sunsetting projects, there was a bit of skepticism. But then came Gemini, and the shift is happening. Let’s see how it unfolds.

Google said in a blog post that ‘millions’ of users have transitioned to Gemini. While this is a positive sign, it is worth weighing that number against the size of the broader Android user base. The focus on ‘most requested features’ like music and timers highlights the practical aspects of the update. The potential for ‘free-flowing, multimodal conversations’ and ‘deep research’ remains an area of interest. The introduction of features like Gemini 2.0 Flash Thinking and ‘Memory’ adds to the evolving capabilities of the platform. AI development is complex, and balancing innovation with timely delivery is a challenge.

The AI revolution, sparked by OpenAI, has certainly changed the landscape. While Apple has hinted at contextually intelligent assistants and fumbled so far, Google’s approach with Gemini 2.0, Android, and Gemini Nano suggests a comprehensive strategy. They have the AI capabilities, the software, and the hardware, which is a significant advantage. The timeline for execution is a point of interest. Google’s announcements at I/O, including Project Astra, initially suggested a year-end rollout. The current mid-year update indicates a delay. While delays are common in tech, timely execution is always preferred.

Ultimately, Google’s move to replace Assistant with Gemini is a necessary step in the age of AI. They have the pieces, but the execution will determine their success. If they can deliver on the promise of a truly intelligent and helpful assistant, they could redefine how we interact with our devices. But if they stumble, they risk falling behind in a rapidly evolving market. The next few months will be crucial.

Google's Grand Experiment: From Energy to Ecosystem—A 13-Year Observation

Thirteen years ago, as a GeekSquad Advanced Repair Agent, I saw Chromebooks for what they were: cheap, $200 laptops with a measly 16GB of storage. Thin clients, the IT crowd called them. I called them underwhelming. Many others thought the same. Back then, Google’s cloud ambitions manifested as these bare-bones machines, a far cry from the integrated ecosystems I was used to, dominated by Macs and PCs. I knew Google made Android and those software services (Search, Docs, Sheets, Slides); they were fine. But the big picture? I missed it. Google didn’t build hardware like Apple did. To me, they were just… energy. Pure potential, no form. Steve Jobs said computers are a bicycle for the mind. Google was the kinetic energy pushing the bike, not the bike itself. In this analysis, I want to trace Google’s evolution from that pure energy to a company building the bike, the road, the pedals, even the rider.

The shift in my perspective came when I grasped the consumer side of cloud computing—servers, racks, the whole ‘someone else’s computer’ spiel. Suddenly, Chromebooks started to make sense. Cost-effective, they said. All the heavy lifting on Google’s servers, they said. Naïve as I was, I hadn’t yet fully registered Google’s underlying ad-driven empire, the real reason behind the Chromebook push. That revelation led me to a stint as a regional Chromebook rep. A role masquerading as tech, but really, it was sales with a side of jargon. The training retreat? Let’s call it an indoctrination session. The ‘Moonshot Thinking’ video from Google [X]—all inspiration, no product—was the hook. Suddenly, streaming movies and collaborative docs weren’t just features, they were visions. ‘Moonshot thinking,’ I told myself, swallowing the Kool-Aid. Cloud computing, in that moment, seemed revolutionary. I even had ‘office hours’ with Docs project managers, peppering them with questions about real-time collaboration. ‘What if someone pastes the same URL?’ I asked, probably driving them nuts. But I was hooked. Cloud computing, I thought, was the future—or so they wanted me to believe.

That journey, from wide-eyed newbie to… well, slightly less wide-eyed observer, has taught me one thing: Google’s Achilles’ heel is execution. They’ve got the vision, the talent, the sheer audacity. But putting it all together? That’s where they stumble. Only in the last three years have they even attempted to wrangle their disparate hardware, software, cloud, and AI efforts into a coherent whole. Too little, too late? Perhaps. Look at the Pixel team: a frantic scramble to catch up, complete with a Jobsian purge of the ‘unpassionate.’ Rick Osterloh is a charming and knowledgeable figurehead, no doubt, but is he a ruthless enough leader? That’s the question. He’s managed to corral the Platforms and Devices division. Yet, the ecosystem still feels… scattered. The Pixel hardware, for all its promise, still reeks of a ‘side project’: a lavish, expensive, and perpetually unfinished side project. The pieces are there, scattered across the table. Can Google finally assemble the puzzle, or will they forever be a company of impressive parts, but no cohesive whole?

After over a decade of observing Google’s trajectory, certain patterns emerge. Chromebooks (bless their budget-friendly hearts), for instance, have settled comfortably into the budget lane: affordable laptops for grade schoolers and retirees. Hardly the ‘sexy’ category Apple’s M-series or those Windows Copilot+ ARM machines occupy, is it? Google’s Nest, meanwhile, envisioned ambient computing years ago. Yet, Amazon’s Alexa+ seems to be delivering on that promise while Google’s vision gathers dust. And let’s talk apps: Google’s own, some of the most popular on both Android and iOS, often perform better on iOS. Yes, that’s changing, slowly. And the messaging app graveyard? Overblown, some say. I say, try herding a family group chat through Google’s ever-shifting messaging landscape. Musical chairs, indeed. But, credit where it’s due, Google Messages is finally showing some long-term commitment. Perhaps the ghosts of Hangouts and Allo are finally resting in peace.

The long view, after thirteen years of observing Google’s sprawling ambitions, reveals a complex picture: immense potential, yet a frustrating pattern of fragmented execution. They’ve built impressive pieces (the AI, the cloud, the hardware), but the promised cohesive ecosystem has remained elusive. Whispers of “Pixel Sense,” Google’s rumored answer to true AI integration, offer a glimmer of hope. (And let’s be clear, these are just rumors. I’m not grading on a curve here.) But, after years of watching disjointed efforts, I find myself cautiously optimistic about the direction Rick Osterloh (knowledgeable, and, some might say, charming) and his newly unified Platforms and Devices division are taking. There’s a sense that, finally, the pieces might be coming together. The vision of a seamlessly integrated Google experience, spanning hardware, software, AI, and cloud, is tantalizingly close. Will they finally deliver? Or will Google continue to be a company of impressive tech demos and unfulfilled promises? Time will tell. But for the first time in a long time, I’m willing to entertain the possibility that Google might just pull it off.

Pixel 10 Leaks

Pixel 10 series leaks are here, brought to us by @OnLeaks on behalf of Android Headlines:

The Pixel 10 series will be the first to use the new Tensor G5 which is expected to be manufactured by TSMC. Google had previously been using Samsung Foundry to manufacture their chipsets for Pixel, and it showed. Google had problems with overheating, the processor was pretty slow, and the modem was bad too. However, Google did fix the modem and mostly fixed the overheating issues with the Tensor G4 on the Pixel 9 series.

We are expecting some big gains in terms of performance on the Tensor G5. However, we also have to keep in mind that this chipset is being built specifically for the Pixel. Google is going to prefer AI performance over raw performance.

The Tensor G5 seems to be the main focus of the Pixel 10 this year. Since the external design doesn’t seem to be changing much, besides an additional speaker grille on the bottom of the device shown in the CAD renders, internal hardware, performance, and software could be where we get the most refinement. I’m perfectly fine with Google not changing the design this year. If the Pixel 9 is the design they’ll sit on for a couple of years (read as 3), I’m happy with that. The Pixel 9 series is beautiful.

However, I had a conversation with one of my IRL friends about some nice things Google should add to the Pixel 10. I’d welcome all of them as long as they don’t raise the price by much. Maybe $50 more would still get me to part with my hard-earned money. Anything north of that, and I might be holding onto my Pixel 9 Pro.

Google Store Oakbrook Customer Service

A fellow Pixel Superfan had a great experience at the Oakbrook Google Store and he thought he’d share:

Just thought it worth sharing, my mom’s Pixel 7a was having battery issues, I did a chat with support where they told me it wasn’t under warranty, went to the store and they did a special warranty replacement given the battery issues that you can find all over the web. 2 hrs later resolved. Especially great considering she got this phone on my family’s Fi plan with a promo that is still on going for 2 yrs. While there they also fixed my charging issue on my Pixel 5 by using effectively a dentist/surgical pick to pull the pile of lint out. Just thought the good service was worth sharing with the community. ❤️

It’s nice hearing success stories like this, especially when the Google Store employees go above and beyond what is even asked of them. I hear this happens a lot. It makes people want to go into the stores instead of dealing with a contractor online. Still, Google’s been doing a much better job at resolving issues at third-party repair shops as well.

Material You and i...OS

Reports are that Apple is bringing a radically new design to iOS with iOS 19.

Mark Gurman, in Bloomberg’s Power On:

“The revamp — due later this year — will fundamentally change the look of the operating systems and make Apple’s various software platforms more consistent, according to people familiar with the effort. That includes updating the style of icons, menus, apps, windows and system buttons.

As part of the push, the company is working to simplify the way users navigate and control their devices, said the people, who asked not to be identified because the project hasn’t been announced. The design is loosely based on the Vision Pro’s software”

Designer Eli Johnson made a brilliant concept of what that iOS redesign could look like, based on the design of the recently released Apple Photos, Sports, and Invites apps. Even Jon Prosser, the popular Apple leaker behind Front Page Tech, leaked a look at what he saw as the new Camera app design in iOS 19.

Personally, I welcome it and I’m looking forward to this new era of UI from Apple because it’s a long time coming.

Since I’ve been working on my own app for Android, I’ve been paying more attention to front-end app design. It’s probably no surprise, but although I do like Apple’s new glass-like visionOS look and what Apple plans to do across its OSes, I’ve realized how much I enjoy Google’s joyful and human Material Design.

Aside from Material Design not compromising on form or function, it just feels more joyful and human to use compared to Apple’s colder, if utopian, sci-fi-leaning design.

Siri, You're Breaking Our Hearts (and Our Settings)

It seems Apple’s AI woes continue. Just when we thought things couldn’t get more embarrassing than delaying “Apple Intelligence,” we get this gem. John Gruber, over on Mastodon, shared a screenshot of his recent conversation with Siri, and let’s just say it’s not a good look:

MacOS 15.3.1. Asked Siri “How do you turn off suggestions in Messages?”

Siri responds with instructions that:

(a) Tell me to go to a System Settings panel that doesn’t exist. There is no “Siri & Spotlight”. There is “Apple Intelligence & Siri" and a separate one for “Spotlight”.

(b) Are for Mail, not Messages, even though I asked about Messages, and Siri’s own response starts with “To turn off Siri suggestions in Messages”

Gruber simply asked Siri how to turn off suggestions in Messages on macOS 15.3.1. Siri, in its infinite wisdom, first sent him on a wild goose chase to a non-existent “Siri & Spotlight” panel in System Settings. Then, it proceeded to give him instructions for Mail, not Messages!

This is beyond a simple bug; it’s a fundamental failure in understanding basic user requests. And remember, this is the same Siri that Apple wants us to believe is the foundation for their upcoming “Apple Intelligence” revolution.

Gruber himself pointed out the irony, highlighting how Apple touted “product knowledge” as a key feature of their AI, yet Siri can’t even navigate its own settings.

“Product knowledge” is one of the Apple Intelligence Siri features that, in its statement yesterday, Apple touted as a success. But what I asked here is a very simple question, about an Apple Intelligence feature in one of Apple’s most-used apps, and it turns out Siri doesn’t even know the names of its own settings panels.

It’s becoming increasingly clear that Apple’s AI ambitions have outpaced their reality. This latest Siri stumble, coupled with the “Apple Intelligence” delay, paints a picture of a company struggling to keep up in the AI race.

Perhaps it’s time for Apple to take a step back, focus on getting the basics right, and then, maybe then, they can start talking about revolutionizing our AI experience.